The Californian firm is caught in a storm of criticism and incomprehension after announcing the rollout of new content monitoring tools. In particular, these tools are meant to let Apple identify any image of a child pornography nature and thus identify the users sharing this content, a practice that is obviously illegal in France, in the United States and elsewhere in the world.

While Apple's intentions may well be good, with this fight for child protection, many are worried about the potential abuses of what appears to be a first step towards mass surveillance of users.

So, to try to reassure users, the company recently published an FAQ (frequently asked questions) focused specifically on this new child protection system. Here is what we learned from it.

What tools are we talking about?

Apple specifies in its FAQ that this new child protection system in fact rests on two separate tools that should not be confused: the warning against sexual content in iMessage and the detection of child pornography content in iCloud, known as CSAM detection (CSAM standing for Child Sexual Abuse Material).

Sexual Image Warning Tool

Exclusive to iMessage photos

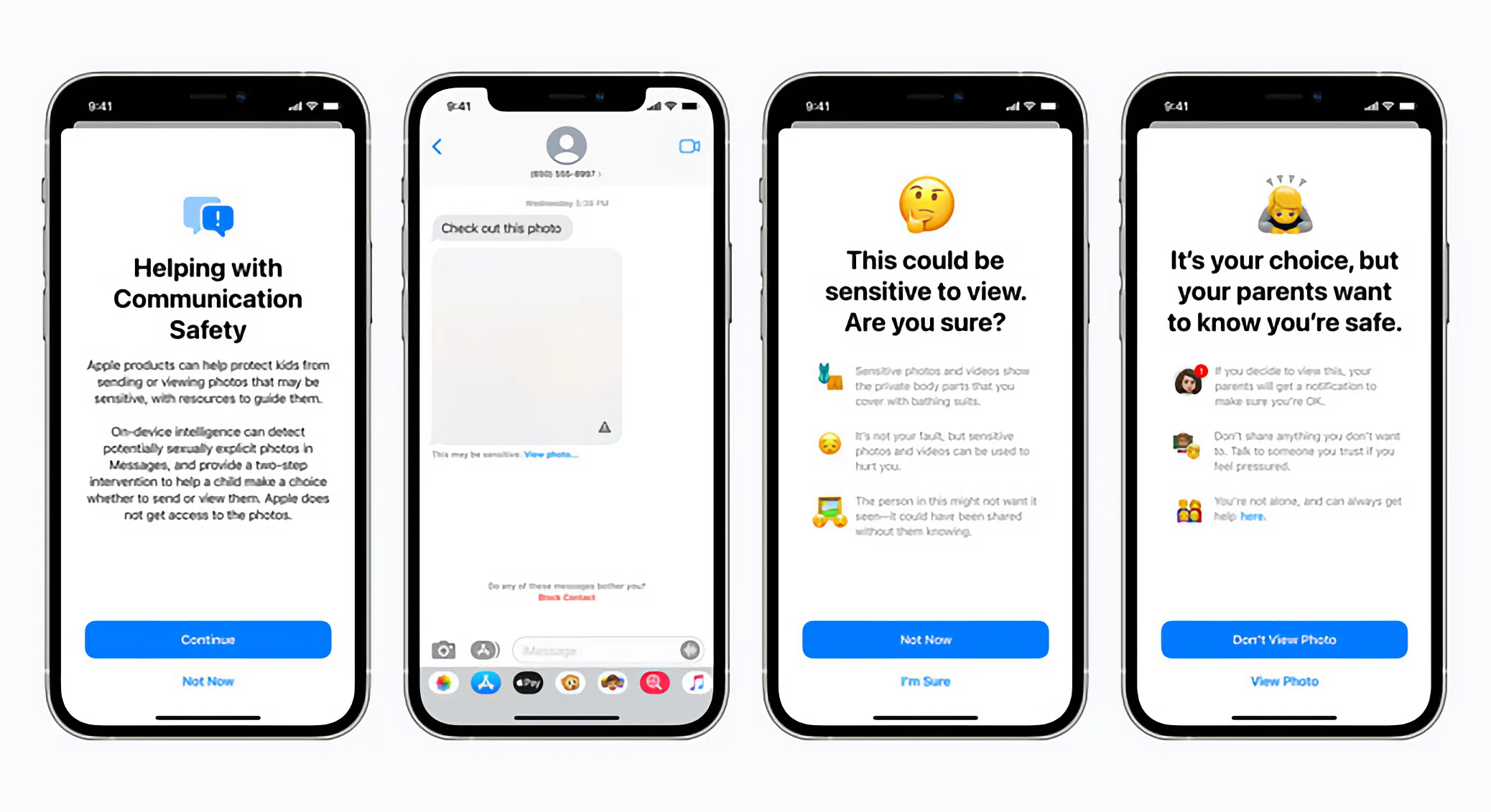

As stated above, the first of the two tools is a mechanism for warning of sexual images. It focuses on images sent by iMessage, with the analysis done locally, on the device. It is active on any child account configured via Family Sharing and can be deactivated by the user who has the rights to do so, generally a parent. It is intended to warn children before they open a photo of a sexual nature that could shock them. When active, the tool blurs any sensitive photo and warns the child. An option also makes it possible to notify a parent when a child views an image identified by this tool.

© Apple

As Apple specifies, this tool does not call into question the end-to-end encryption underlying iMessage. At no time does the company access the contents of iMessage exchanges. The image is analyzed only on the device used to open it, at the receiving end of the exchange, and, as noted, locally.

Additionally, Apple says it does not share any sexual image detection information from this tool with any authority.

CSAM child pornography content detection tool

The second tool is a mechanism for detecting child pornography images. It is only active on iCloud photos. See details below.

What content is analyzed by CSAM detection?

CSAM analysis is carried out only on photos, not on videos. Furthermore, only photos uploaded to iCloud are taken into account. If you don't use iCloud backup, your photos won't go through Apple's child pornography detection tool.

CSAM detection does not scan photos in your local photo library on iPhone or iPad, nor iMessage content. There is therefore no violation of the end-to-end encryption of Apple's messaging service. As for privacy on iCloud, that's another question.

Can a human view iCloud images?

Not systematically. An image of a child pornography nature is detected in a user's iCloud storage using artificial intelligence and a comparison with existing image databases from the NCMEC (National Center for Missing and Exploited Children) and other child protection associations.

Apple also specifies in this regard that false positives are extremely rare: less than one in one trillion per year for a given account, according to the firm.

Once an image has been detected by the AI, it is then submitted to human review to confirm or reject the detection. Apple guarantees that the innocent have nothing to fear.
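To make the flow described above more concrete, here is a minimal sketch of the selection logic: only photos stored in iCloud are compared against a set of known fingerprints, and any match is queued for human review rather than acted on automatically. The FAQ as summarized here does not detail the matching algorithm, so everything in this block is an assumption made for illustration: the `Upload` type, the `flag_for_review` function, and above all the use of a plain SHA-256 digest as the fingerprint, which only matches byte-identical files and stands in for whatever image fingerprint Apple actually uses.

```python
import hashlib
from dataclasses import dataclass


def fingerprint(data: bytes) -> str:
    # Stand-in fingerprint (assumption): an exact SHA-256 digest.
    # It only matches byte-identical files, unlike a real image fingerprint.
    return hashlib.sha256(data).hexdigest()


@dataclass
class Upload:
    # Hypothetical record describing one item in a user's library.
    name: str
    data: bytes
    is_video: bool = False
    in_icloud: bool = True


def flag_for_review(uploads, known_fingerprints):
    """Return the names of items that match a known fingerprint.

    Matches are only candidates: per the article, a human then
    confirms or rejects each detection.
    """
    flagged = []
    for item in uploads:
        # Videos and photos that never reach iCloud are out of scope.
        if item.is_video or not item.in_icloud:
            continue
        if fingerprint(item.data) in known_fingerprints:
            flagged.append(item.name)
    return flagged


# Illustrative data only: arbitrary byte strings, not real images.
known = {fingerprint(b"known")}
library = [
    Upload("a.jpg", b"holiday"),
    Upload("b.jpg", b"known"),
    Upload("c.mov", b"known", is_video=True),
    Upload("d.jpg", b"known", in_icloud=False),
]
print(flag_for_review(library, known))  # ['b.jpg']
```

In this sketch, the video and the photo kept off iCloud are skipped even though their contents match, which mirrors the scope limits the FAQ describes.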

What are the consequences of a positive detection?

If, after human review, an iCloud account is found to contain child pornography images, the NCMEC is notified. What happens after this alert to the NCMEC is not known, however. The competent authorities may subsequently be notified to handle the case and the identified user.

An expansion to other content in the future?

The Californian firm assures that its system was built to focus only on child pornography content. In addition, it specifies that it will refuse any government request to add other content to the detection system. For the Cupertino giant, this is only a matter of detecting child pornography images, nothing else.

An extension to third-party services?

As MacRumors reports, Apple aims for this detection of child pornography images to also be deployed on third-party tools. We think, for example, of WhatsApp, Instagram or even Google Photos. But to date, this is not yet the case. Only photos on iCloud are currently affected by CSAM analysis.

Siri and Spotlight also used

Apple indicates that Siri and Spotlight search will also be able to participate in this fight for child protection, by displaying more practical information on the subject during Siri requests and Spotlight searches from the iPhone and iPad.


When will the tools be active?

These sexual and child pornography image detection features will be implemented in iOS 15, iPadOS 15, macOS Monterey and watchOS 8 and later versions, at a date still unknown.
