Google recently shed light on one of its most ambitious projects of the decade: Gemini, which comes in three variants, Nano, Pro, and Ultra. The company presented the largest variant as the most powerful AI language model on the market, highlighting in particular results superior to GPT-4 on several benchmarks.

It has also published nearly twenty videos on the subject on its YouTube channel, some of which show off Gemini's supposed prowess. It is a way for Alphabet to flex its muscles in the face of OpenAI's dazzling success. However, it appears that Google faked one of these demonstrations in order to impress viewers. The strategy seems to have worked perfectly, given how viral the video went: it has more than two million views, whereas of the twenty or so videos Google published about Gemini, only five exceed 200,000 views. Those figures are rather low for a channel with 11 million subscribers on the company's own platform.

“Breathtaking”, yes, but no

The “faked” video is the one showing Gemini Pro's ability to describe what it sees. It appears to show a person interacting live with the AI: the person draws, then asks Gemini out loud to describe the drawing, and Gemini promptly responds with a precise description of what it sees. Internet users were quick to react, with some calling the video “breathtaking” on the social network X. Here it is again:

Google admits deception

At first glance, it is easy to believe in feats that AI is not yet capable of. But reading the video's description, it quickly becomes clear that there is nothing exceptional here: “For the purposes of this demo, latency has been reduced and Gemini outputs have been shortened for brevity”. In other words, Google sped up the AI's responses and added voice and video on top, which strongly suggests a live interaction with a Gemini that can hear and see.

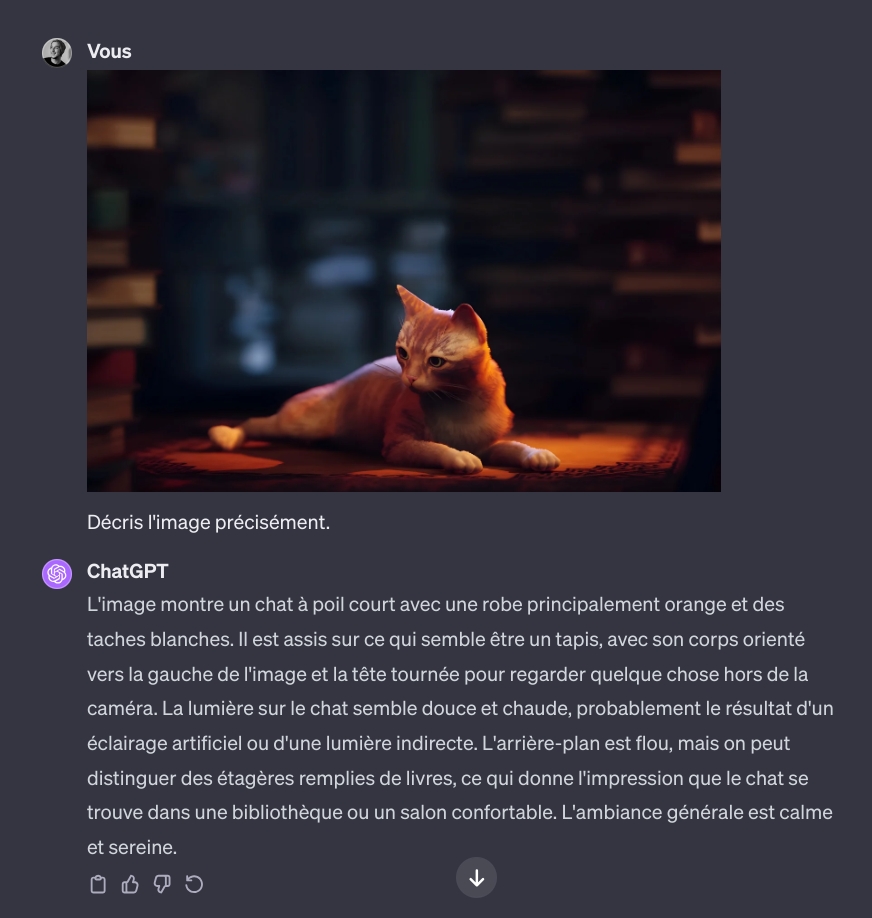

In reality, the company only obtained these precise descriptions by “using still images from the sequence and prompts via text”, a spokesperson for the group told Bloomberg. That is very different from what the video suggests. It is also nothing exceptional, since GPT-4 can do the same, as the example below shows. I asked GPT-4 to describe the cover photo of our article on the game Stray, which was recently released on Mac, and here is the result:

© iPhon.fr / Sami Trabcha

As you can see, the description is precise and has nothing to envy in what Google shows. As for the benchmark results above GPT-4, it is worth remembering that GPT-4 has been a finished product for over a year already, and that Google only managed to beat it by a few points on most criteria, and failed to on some.

On the one hand, some analysts believe that, given the small score gap between the two, there is no evidence that Gemini outperforms GPT-4 in real-world use, since other factors come into play. On the other hand, while OpenAI has been developing GPT-5 for some time now, Google is barely ahead of GPT-4, which will undoubtedly be far less capable than its successor.
